`check_input` bool, default=True: Allows bypassing several input checks. Don't use this parameter unless you know what you're doing.

When samples are weighted, splits that would create child nodes with net zero or negative weight are ignored while searching for a split in each node. Splits are also ignored if they would result in any single class carrying a negative weight in either child node.

# Decision Tree Entropy: How To

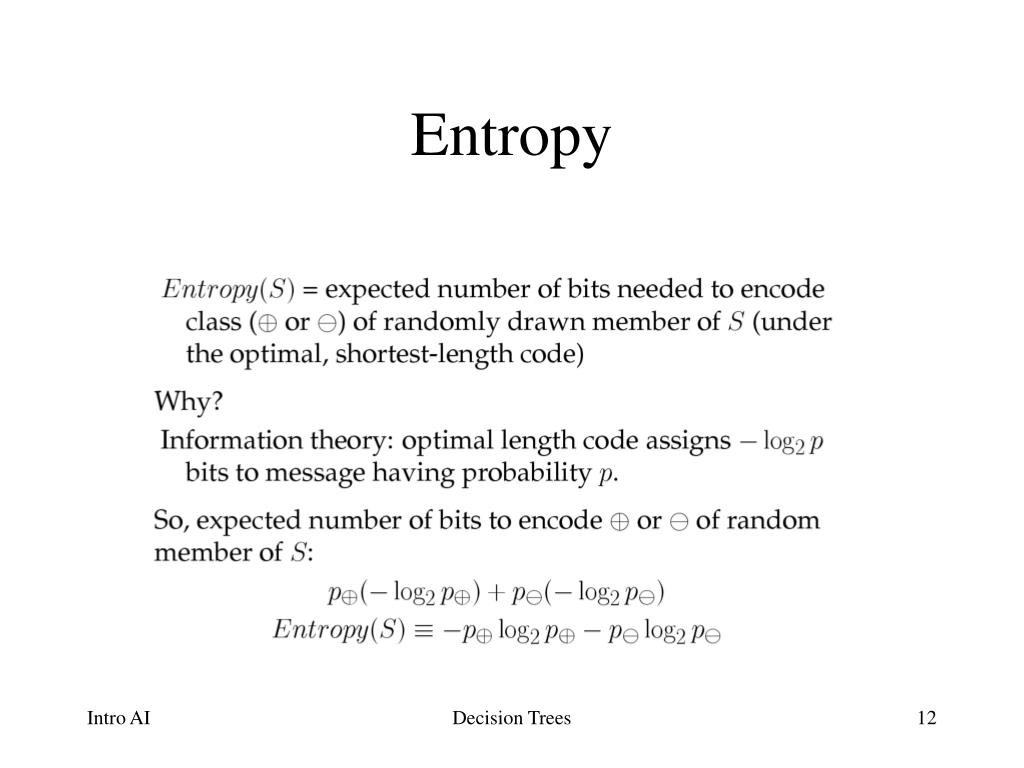

A decision tree is a hierarchical decision-support model that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that contains only conditional control statements. Decision tree learning is a supervised learning approach used in statistics, data mining, and machine learning. In this formalism, a classification or regression decision tree is used as a predictive model to draw conclusions about a set of observations.

Another example is deciding whether or not to play tennis on a Saturday. This is a binary classification problem (play vs. no-play) in which each input (a Saturday) has 4 features, including Outlook and Temp.

To make a decision tree, all data has to be numerical. We have to convert the non-numerical columns Nationality and Go into numerical values. Pandas has a `map()` method that takes a dictionary with information on how to convert the values. The data is loaded with `df = pandas.read_csv('data.csv')` and displayed with `print(df)`.

Entropy, information gain, and the Gini index are the crux of a decision tree. Entropy is an information theory metric that measures the impurity or uncertainty in a group of observations. It determines how a decision tree chooses to split data. The image above gives a better description of the purity of a set. This is an important concept to grasp in order to fully understand decision trees.

Consider a dataset with N classes. Its entropy is

$$E = -\sum_{k=1}^{N} P(x_k) \log_2 P(x_k)$$

where $P(x_k)$ is the probability that the target feature takes a specific value, k.

The `fit` method takes the following parameters:

`X` {array-like, sparse matrix} of shape (n_samples, n_features): The training input samples. Internally, it will be converted to dtype=np.float32, and if a sparse matrix is provided, to a sparse csc_matrix.

`y` array-like of shape (n_samples,) or (n_samples, n_outputs): The target values (class labels) as integers or strings.

`sample_weight` array-like of shape (n_samples,), default=None: Sample weights. If None, then samples are equally weighted.
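To make the entropy and information gain ideas above concrete, here is a minimal sketch in plain Python. The function names `entropy` and `information_gain` and the toy play/no-play labels are illustrative assumptions, not part of any library; the logarithm is taken base 2, so the result is in bits.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    total = len(labels)
    counts = Counter(labels)
    # Sum -P(x_k) * log2(P(x_k)) over every class k present in the set.
    return sum(-(c / total) * log2(c / total) for c in counts.values())


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical toy data: 5 "play" and 5 "no-play" Saturdays.
parent = ["play"] * 5 + ["no-play"] * 5
# A hypothetical split that separates the classes fairly well.
left = ["play"] * 4 + ["no-play"] * 1
right = ["play"] * 1 + ["no-play"] * 4

parent_entropy = entropy(parent)
gain = information_gain(parent, [left, right])
print(parent_entropy, gain)
```

A perfectly mixed two-class set has the maximum entropy of 1 bit, while a pure set has entropy 0. A tree that splits on entropy prefers the candidate split with the largest information gain.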
Evaluating the entropy is a key step in decision trees. However, it is often overlooked, as are other measures of the messiness of the data, such as the Gini coefficient. In scikit-learn, the measure is selected through the `criterion` parameter of the classifier, which has the following signature:

`DecisionTreeClassifier(*, criterion='gini', splitter='best', max_depth=None, min_samples_split=2, min_samples_leaf=1, min_weight_fraction_leaf=0.0, max_features=None, random_state=None, max_leaf_nodes=None, min_impurity_decrease=0.0, class_weight=None, ccp_alpha=0.0)`
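As a rough sketch of how these pieces fit together, the example below encodes the non-numerical columns with `map()` and fits a `DecisionTreeClassifier` with `criterion='entropy'`. The rows, the `Age` column, and the numeric codes are made up for illustration (the real `data.csv` is not reproduced here), and an inline DataFrame stands in for the CSV file.

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier

# Hypothetical stand-in for data.csv; values are invented for illustration.
df = pd.DataFrame({
    "Age": [36, 42, 23, 52, 43, 44],
    "Nationality": ["UK", "USA", "N", "USA", "UK", "N"],
    "Go": ["NO", "NO", "NO", "YES", "YES", "YES"],
})

# Convert the non-numerical columns to numbers with dictionaries.
df["Nationality"] = df["Nationality"].map({"UK": 0, "USA": 1, "N": 2})
df["Go"] = df["Go"].map({"YES": 1, "NO": 0})

X = df[["Age", "Nationality"]]  # feature columns
y = df["Go"]                    # target column

# Use entropy instead of the default Gini impurity to evaluate splits.
clf = DecisionTreeClassifier(criterion="entropy", random_state=0)
clf.fit(X, y)

predictions = clf.predict(X)
print(predictions)
```

Because the tree is grown without a depth limit and no two rows share the same features, it reproduces the training labels exactly. That says nothing about how it would perform on unseen data.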
